Difference between revisions of "Simulator Structure"


Revision as of 13:48, 5 November 2013

This text is taken from Chapter 4 of the PhD thesis "Human Bionically Inspired Decision Unit" written by Tobias Deutsch. Some parts of the text might be outdated, but the overall concept is valid.

A platform is a software framework which makes it possible to execute a piece of software. In the context of this work, the platform is an A-Life simulator where different control architectures can be tested. The definition of A-Life coined by Langton is:

Artificial Life is a field of study devoted to understanding life by attempting to abstract the fundamental dynamical principles underlying biological phenomena, and recreating these dynamics in other physical media - such as computers - making them accessible to new kinds of experimental manipulation and testing [p xiv, Langton1991].

While this definition focuses on biological phenomena and emergence (cf. [p 5, Sipper1995]), A-Life simulations are also used for agent-based models and for the evaluation of control architectures (see Section \ref{sec:stota:alife:relatedprojects}). Based on the principles listed in the definition, the testbed for the use-cases defined above is described in detail below.

Concept

The basic idea for this simulator is to create a world in which a special type of agent is put to the test by exposing it to special setups. For this, the world has to offer an environment consisting of different objects and other agents. The objects range from pure obstacles and landmarks, like a special pole or a wall, to small stones which could be used as tools. A special type of object is the energy source. Inspired by food like grass or bushes, these sources provide nutrition for the agents and can be removed from the world by consuming them. Some of them regrow, providing a constant source of food. The other agents resemble something like animals. Equipped with more or less complex control architectures, they provide nutrition, are things to play with (like a pet), are sources of danger (in case they are fearsome hunters), and offer other interaction possibilities. The special type of agent - named ARSIN <ref> A single agent is called ARSIN, the plural of it is ARSINI. ARSINO refers to a single male agent and ARSINA to a single female agent.</ref> - is used for the control architectures which should be evaluated. Its body consists of various sensors, actuators, and internal mechanisms.

The implementation of the simulator is based on the development toolkit MASON version 13 [Mason10] (see Section \ref{sec:stota:alife:simplatforms}). The world is two-dimensional and projected onto the surface of a torus. Thus, the x-axis end points are connected, as well as the y-axis end points. This results in a ring-shaped world enabling the agents to move unbounded. As all objects and agents within the world are two-dimensional, a fully featured physics engine like ODE [ODE11] cannot be used. These types of physics engines require a three-dimensional world. Thus, a simpler, 2D rigid body physics engine [MasonPhysics11] developed for MASON is used.
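The wrap-around of the torus-shaped world can be illustrated with a few lines of Java. This is only a minimal sketch: the class `TorusWorld` and its methods are hypothetical names chosen for this illustration and are not taken from the simulator or from MASON.

```java
/** Hypothetical helper (not part of the simulator or MASON): coordinates on a torus. */
final class TorusWorld {
    final double width;
    final double height;

    TorusWorld(double width, double height) {
        this.width = width;
        this.height = height;
    }

    /** Maps any coordinate into [0, size): leaving on one edge re-enters on the opposite edge. */
    static double wrap(double value, double size) {
        double r = value % size;          // Java's % keeps the sign of the dividend
        if (r < 0) {
            r += size;
        }
        return r >= size ? 0.0 : r;       // guards against rounding up to exactly 'size'
    }

    /** Shortest signed offset from a to b along one axis, taking the wrap-around into account. */
    static double shortestDelta(double a, double b, double size) {
        double d = wrap(b - a, size);
        return d > size / 2 ? d - size : d;
    }

    /** Distance between two points when both axes are connected at their end points. */
    double distance(double x1, double y1, double x2, double y2) {
        return Math.hypot(shortestDelta(x1, x2, width), shortestDelta(y1, y2, height));
    }
}
```

In this sketch, two entities close to opposite borders are considered near each other, which is what allows agents to move without ever reaching an edge of the world.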

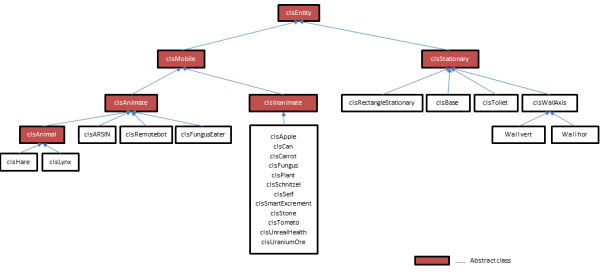

As shown in Figure \ref{fig:platform:arsin:entityhierarchy}<ref>Details of all UML diagrams in this chapter are omitted so as not to clutter the diagrams. The used UML notations are explained in Appendix \ref{app:uml}.</ref>, every object, animal, or agent is designed to be a specialization of the //Entity// class. The rigid body physics engine knows two types of objects: stationary and mobile ones. This is modeled by the first specializations of class //Entity//: //MobileEntity// and //StationaryEntity//. Each of them implements one of the two interfaces to the physics engine (<<interface>> //Mobile// and <<interface>> //Stationary//, respectively). Stationary entities like Landmark, Wall, and LargeStone are of infinite weight and can never be moved during the simulation. Mobile entities differ: their weight is limited and they have a friction coefficient. Objects of type //InAnimate// can be moved around, but they can never perform an action themselves. This group contains not only things like Stone or Can, but also energy sources which are removed from the world after they have been consumed, like Cake. Although objects of type Plant can neither move by themselves nor be moved by others, they are a sub-class of //Animate//. The reasons for this design decision are that plants can regrow and are thus not inanimate, and that plants can be picked up and carried around, which makes them mobile. Whether a plant can be carried depends on its weight and the abilities of the agent trying to perform this action. Thus, it makes no difference whether very large - in fact stationary - entities like huge trees are modeled as mobile or as stationary entities. The class Animal as well as the class ARSIN Agent implement the interface Body, which is used to instantiate more or less rich bodies for the agents. Additionally, class ARSIN Agent implements the interface DecisionUnit. This provides the framework to use different control architectures for this entity. All entities which are consumable - independent of the consequence for the agent eating them - have to implement the <<interface>> Flesh.
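The hierarchy described above could look roughly as follows in Java. The class and interface names are taken from the text. Everything else is an assumption made for this sketch: the constructor parameters, the methods `mass()` and `friction()`, the empty marker interfaces, the placement of //Animate// and //InAnimate// below //MobileEntity//, and the choice of which concrete classes implement Flesh.

```java
// Interfaces named in the text; their contents here are assumptions for illustration.
interface Stationary {}
interface Mobile { double mass(); double friction(); }
interface Flesh {}          // methods omitted in this sketch
interface Body {}
interface DecisionUnit {}

abstract class Entity {
    final String name;
    Entity(String name) { this.name = name; }
}

// Stationary entities can never be moved by the physics engine.
abstract class StationaryEntity extends Entity implements Stationary {
    StationaryEntity(String name) { super(name); }
}

// Mobile entities have a limited weight and a friction coefficient.
abstract class MobileEntity extends Entity implements Mobile {
    private final double mass;
    private final double friction;
    MobileEntity(String name, double mass, double friction) {
        super(name);
        this.mass = mass;
        this.friction = friction;
    }
    @Override public double mass() { return mass; }
    @Override public double friction() { return friction; }
}

abstract class InAnimate extends MobileEntity {
    InAnimate(String name, double mass, double friction) { super(name, mass, friction); }
}

abstract class Animate extends MobileEntity {
    Animate(String name, double mass, double friction) { super(name, mass, friction); }
}

class Wall extends StationaryEntity { Wall(String name) { super(name); } }
class Stone extends InAnimate { Stone(String n, double m, double f) { super(n, m, f); } }
class Cake extends InAnimate implements Flesh { Cake(String n, double m, double f) { super(n, m, f); } }
class Plant extends Animate implements Flesh { Plant(String n, double m, double f) { super(n, m, f); } }
class Animal extends Animate implements Body, Flesh { Animal(String n, double m, double f) { super(n, m, f); } }
class ArsinAgent extends Animate implements Body, DecisionUnit, Flesh {
    ArsinAgent(String n, double m, double f) { super(n, m, f); }
}
```

With such a layout, a check like `entity instanceof Flesh` is enough to decide whether an entity is part of the food cycle, independent of its position in the class tree.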

Neither entities of type Plant nor of type Animal are modeled to be close to their counterparts in nature. Moreover, they are rough concepts used to guide further development. Plants refer to stationary objects which have a growth life cycle and can usually be consumed. Animals refer to mobile objects which move around, might need to consume food, and have a simple control attached.

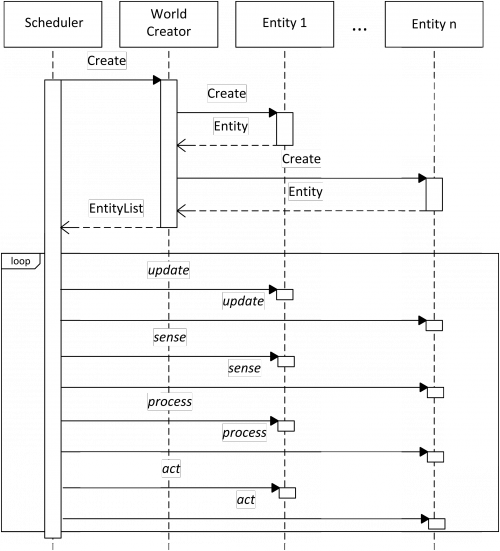

Each specialization of class //Entity// has to implement the following four public methods: //update//, //sense//, //process//, and //act//. Within each simulation step, all four methods are called such that all entities perform the same sub-step at the same time. The diagram shown in Figure \ref{fig:platform:arsin:simsequence}<ref>The calls of the entity functions //update//, //sense//, //process//, and //act// are shown simplified.</ref> depicts this control sequence. Before the first simulation cycle can be performed, the world and all its entities have to be created. The class WorldCreator is responsible for this task. Each entity has access to the list of all existing entities. This is necessary to perform the sensing step. Within the simulation step loop, the first action performed by the entities is //update//: plants update their growth cycle information, animals "digest" consumed nutrition, and ARSIN Agents update their rich internal system. The next sub-step is the execution of the method //sense//. All entities which have sensors update their world information by accessing data of entities which are within range of their various sensors. After sensing, all entities execute their //process// method, which calls their control algorithms to decide which actions should be selected for execution. Finally, by calling the method //act//, all agents execute their actions and thereby change the state of the world. The reason for dividing the simulation step into these four sub-steps is to ensure that all agents base their decisions on the same world state. This approach avoids favoring the agent which is first in the list of entities. Consider the conflict that occurs when two agents decide to consume the same energy source at the same time. Without the sub-steps, the first agent in the list would consume it, and the other one would be punished for trying to consume a non-existing source. This has a negative impact, for example, in the case of negative feedback learning.

Another advantage of this approach is that within one sub-step, the method calls can be parallelized. For the entities, three of the four sub-steps take zero time. Only the last sub-step - acting - is perceived by the entities to take time, as it alters the state of the world. Many of the entity types do not need any of the four methods. A stone has no internal states, no sensors, no actuators, and definitely no control architecture. The reason for the design decision to force all entities to implement these methods is to enable some programmable behavior for all entities. For example, a lighthouse added as a special type of landmark could turn its light on and off by implementing the corresponding code in method //process//.
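The four sub-steps can be sketched as a small scheduler that runs each phase for all entities before the next phase starts. The method names //update//, //sense//, //process//, and //act// come from the text. The types `SimEntity`, `PhasedScheduler`, `FoodSource`, and `Eater` are hypothetical and exist only for this illustration. How the simulator resolves two simultaneous eat actions is not described here, so the sketch leaves that out.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for the four public methods every Entity has to implement. */
interface SimEntity {
    void update();
    void sense(List<SimEntity> all);
    void process();
    void act();
}

/** Runs one phase for ALL entities before the next phase starts. */
final class PhasedScheduler {
    private final List<SimEntity> entities = new ArrayList<>();

    void add(SimEntity e) { entities.add(e); }

    void step() {
        for (SimEntity e : entities) e.update();
        for (SimEntity e : entities) e.sense(entities);
        for (SimEntity e : entities) e.process();
        for (SimEntity e : entities) e.act();
    }
}

/** An entity without behavior still has to provide the four methods. */
final class FoodSource implements SimEntity {
    boolean available = true;
    @Override public void update() {}
    @Override public void sense(List<SimEntity> all) {}
    @Override public void process() {}
    @Override public void act() {}
}

/** Decides in process() based on what it saw in sense(), not on the live world state. */
final class Eater implements SimEntity {
    private final FoodSource source;
    private boolean sawFood;
    boolean wantsToEat;

    Eater(FoodSource source) { this.source = source; }

    @Override public void update() {}
    @Override public void sense(List<SimEntity> all) { sawFood = source.available; }
    @Override public void process() { wantsToEat = sawFood; }
    @Override public void act() { if (wantsToEat) source.available = false; }
}
```

Because sensing is finished for every entity before any entity acts, two `Eater` instances targeting the same `FoodSource` both perceive it as available and both decide to eat, regardless of their order in the list.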

The whole project is divided into seven sub-projects (see Figure \ref{fig:platform:arsin:packages}<ref>Details are omitted so as not to clutter the diagram. The sub-project at the origin of an arrow accesses classes which are contained in the sub-project the arrow points at.</ref>). The reason for this design decision is to keep the various sub-projects as independent from each other as possible. The control architectures which are part of sub-project DecisionUnit are totally independent from the multi-agent framework in sub-project MASON. The main sub-project is Simulator. It is responsible for world creation and contains the scheduler described above. Each entity is created with its optional decision unit. How an entity is defined in detail is implemented in sub-project World. It contains things like the sensors, the bodies, and the interaction possibilities, and it utilizes the two interfaces to the physics engine provided by Physics. In sub-project DUInterface, common classes needed for the decision unit framework are implemented. MASON provides several inspectors to view internal states of the agents. Body and entity related inspectors are stored in World. The ones necessary to view internal states of a decision unit are stored in DUInspectors. With this design, all three main parts of the simulation - the multi-agent simulation framework, the ARSIN world, and the decision units - can be exchanged without the need to change much in the other sub-projects.

Each parameter of an entity and all other parameters of the simulation can be configured using external config files. This makes it possible to generate different setups for the use-cases and to test the influence of various parameters on the personality of the ARSIN Agents.
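The format of these config files is not described here. As a rough illustration of the idea only, the following sketch reads parameters with the standard Java class `java.util.Properties`. The class `SetupConfigSketch` and the keys `world.width` and `plant.regrowRate` are invented for this example and are not the simulator's actual parameter names.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical config reader; keys and defaults are assumptions for illustration. */
final class SetupConfigSketch {

    /** Parses "key=value" lines, as they could appear in an external config file. */
    static Properties parse(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }

    /** Returns the numeric value stored under key, or fallback if the key is missing. */
    static double getDouble(Properties properties, String key, double fallback) {
        String raw = properties.getProperty(key);
        return raw == null ? fallback : Double.parseDouble(raw.trim());
    }
}
```

Two setups for the same use-case would then differ only in their config text, for example in the value of the assumed key `plant.regrowRate`, while the simulation code stays unchanged.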

After this rough overview of the ARSIN world simulation, the three types of mobile and animate entities are described in more detail in the next two subsections.

Animals, Plants, and Energy Sources

As an alternative to the categorization of the entities given by the class hierarchy depicted in Figure \ref{fig:platform:arsin:entityhierarchy}, the entities can be divided into "part of the food cycle" and "not part of it." Stones, landmarks, and cans are definitely not edible and thus part of the latter group. All entities which implement the Flesh interface are part of the first group.

Animate entities like animals and plants as well as inanimate entities like cakes can be consumed. Food is used synonymously with energy source in this work in order to keep the A-Life simulation as open as possible. Thus, an electric power recharge station would be modeled similarly to a plant. A special nutrition type would have to be added which is only available at this station. All this is done by implementing the Flesh interface. It contains one method which returns two values: first, a list of the different nutrition fractions per unit the flesh is made of; second, the amount of flesh withdrawn from the entity. Thus, if entity //A// tries to consume entity //B//, "flesh" or nutrition is removed from //B// and added to the "stomach" of //A//. Whether the retrieved nutrition is nourishing or poisonous has to be decided by the internal systems of entity //A//.

The fate of entity //B// in case all of its flesh is removed has to be decided by the controller of entity //B//. Within the update phase of a simulation step, entities can define what happens to their "flesh." In case of animals, the "wound" generated by the missing "flesh" could heal; plants could regrow their "flesh." Another possibility could be that they "die."
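A minimal sketch of such a consumption step is given below. The text only states that the Flesh interface has one method returning the nutrition fractions per unit and the withdrawn amount. The names `ConsumableFlesh`, `Bite`, `withdraw`, `SimpleFlesh`, and `StomachSketch`, as well as the nutrition names used in the usage example, are assumptions made for this illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** The two values returned by one withdrawal: fractions per unit and the amount taken. */
final class Bite {
    final Map<String, Double> fractionsPerUnit;
    final double amount;

    Bite(Map<String, Double> fractionsPerUnit, double amount) {
        this.fractionsPerUnit = fractionsPerUnit;
        this.amount = amount;
    }
}

/** Hypothetical stand-in for the Flesh interface with its single method. */
interface ConsumableFlesh {
    Bite withdraw(double requestedAmount);
}

/** Entity B: hands out flesh until nothing is left. */
final class SimpleFlesh implements ConsumableFlesh {
    private final Map<String, Double> fractions;
    private double remaining;

    SimpleFlesh(Map<String, Double> fractions, double initialAmount) {
        this.fractions = new LinkedHashMap<>(fractions);
        this.remaining = initialAmount;
    }

    @Override
    public Bite withdraw(double requestedAmount) {
        // Never hand out more than is left, and never a negative amount.
        double taken = Math.max(0.0, Math.min(requestedAmount, remaining));
        remaining -= taken;
        return new Bite(fractions, taken);
    }

    double remaining() { return remaining; }
}

/** Entity A: stores the withdrawn nutrition; judging it is left to A's internal systems. */
final class StomachSketch {
    private final Map<String, Double> contents = new LinkedHashMap<>();

    void add(Bite bite) {
        for (Map.Entry<String, Double> e : bite.fractionsPerUnit.entrySet()) {
            contents.merge(e.getKey(), e.getValue() * bite.amount, Double::sum);
        }
    }

    double amountOf(String nutrition) { return contents.getOrDefault(nutrition, 0.0); }
}
```

In this sketch the eaten entity only reports what it is made of. Whether a nutrition type is nourishing or poisonous is not encoded in `Bite`, which mirrors the division of responsibilities described above.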

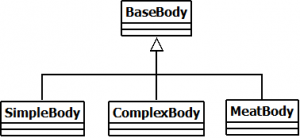

The entity types Animal and ARSIN Agent implement the interface Body. Figure \ref{fig:platform:arsin:body} shows the list of available bodies which can be used with this interface. All three are specializations of the abstract class //AbstractBody//. The body of type Meat consists only of "flesh." It is used for special purpose entities which do not need any further sensors and actuators or which bypass the regular interfaces to the MASON framework. The Simple body contains some sensors and actuators to enable animals to interact with the environment. In place of the internal systems available with the third body type, it uses a very simple energy system. If fitting food is consumed, energy is increased. If unfitting food is consumed or the entity is injured, energy is reduced. In case the energy level reaches zero, the entity dies. The third type - class Rich - consists of an internal system as well as sensors and actuators for the internal system and the environment. This body is explained in more detail in the next section.
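The energy system of the Simple body can be sketched in a few lines. The class name `SimpleEnergySystem`, its methods, and the use of plain amounts for food and injuries are assumptions for this illustration. Only the three rules (fitting food raises energy, unfitting food or injury lowers it, zero energy means death) come from the text.

```java
/** Hypothetical sketch of the very simple energy system of the Simple body. */
final class SimpleEnergySystem {
    private double energy;

    SimpleEnergySystem(double initialEnergy) {
        this.energy = Math.max(0.0, initialEnergy);
    }

    /** Fitting food increases energy, unfitting food reduces it. */
    void eat(boolean fittingFood, double amount) {
        change(fittingFood ? amount : -amount);
    }

    /** Injuries reduce energy as well. */
    void injure(double damage) {
        change(-damage);
    }

    private void change(double delta) {
        energy = Math.max(0.0, energy + delta);   // energy never drops below zero
    }

    double energy() { return energy; }

    /** The entity dies when the energy level reaches zero. */
    boolean isDead() { return energy <= 0.0; }
}
```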

The simple body can perform five actions and has five external and two internal sensors. The external sensors are:

* [Acoustic:] Sounds emitted by entities within a predefined distance are perceived by this sensor. Additionally, depending on the distance, details can be omitted. For example, far away sounds cannot be deciphered; only the information that a noise occurred is perceived.

* [Bump:] The force and the body part which has been hit by another entity are perceived.

* [Eatable Area:] Right in front of the agent is a small area. Entities which are within it can be eaten. This sensor returns a list of entities within its borders.

* [Olfactoric:] An entity can smell the presence of other entities. The closer they are, the more intense they smell. Odors of one type are added to a single value. Thus, depending on the result of the olfactory sensor alone, the exact number of nearby entities of one type cannot be determined.

* [Vision:] This sensor returns information on all entities which are within the field-of-view. At the current stage of implementation, no difference between hidden and non-hidden entities is made. Similarly to the acoustic sensor, the amount of information received decreases with the distance of the observed entity.

The second group of sensors perceives information from inner body processes like available energy. The simple body contains two internal sensors:

* [Energy:] The available energy of the entity is returned by this sensor.

* [Energy Consumption:] Returns the rate at which the energy is reduced by the performed actions.

Every action performed costs energy. Even if no action is executed, "life" itself needs energy. Each action can be executed at different intensities, resulting in different energy demands. A detailed explanation of the action command system in the simulator can be found in [Doenz2009]. The five actions which can be executed by the simple body are:

* [Move:] Move forward or backward with a given force. The stronger the force, the faster the entity moves. The resulting speed depends on the floor friction and on the entity's own weight. As long as the applied force is stronger than the counter force defined by the friction and the weight, the entity moves. If no or not enough force is applied, the entity stops after a while. The energy demand is directly proportional to the applied force.

* [Turn:] This actuator operates similarly to the move actuator. As long as enough force is applied, the entity turns left or right. Again, the energy demand is directly proportional to the applied force.

* [Eat:] Consume an energy source which is located within the range defined by the eatable area sensor. The eat action's energy demand is a constant value withdrawn from the available energy as long as it is performed.

* [Attack Bite:] This is the first of the two aggressive actions available. Before prey can be consumed, it has to be hunted down. Alternatively, nearby enemies can be bitten to either drive them off or even kill them. To bite an entity, it has to be within the eatable area. In the current implementation, the bite action's energy demand is constant for each bite performed.

* [Attack Lightning:] This is a long-range weapon. It can be used to attack prey or enemies that are within sight. Unlike the bite attack, the strength of a lightning attack can be controlled. The energy demand is directly proportional to the strength of the emitted lightning strike.
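The energy demands listed above follow two patterns: proportional to an intensity (move, turn, lightning) or constant (eat, bite), on top of a base demand for "life" itself. The following sketch illustrates how such demands could be summed for one simulation step. The class `ActionEnergySketch` and all constants are invented for this example. The actual values and the action command system are documented in [Doenz2009].

```java
/** Hypothetical energy demand model; all constants are made-up illustration values. */
final class ActionEnergySketch {
    static final double BASE_DEMAND = 0.125;      // "life" itself needs energy
    static final double MOVE_FACTOR = 0.5;        // demand per unit of applied force
    static final double LIGHTNING_FACTOR = 2.0;   // demand per unit of strike strength
    static final double EAT_DEMAND = 0.25;        // constant while eating
    static final double BITE_DEMAND = 0.5;        // constant per bite

    /** Directly proportional to the applied force; the direction does not matter. */
    static double move(double force) {
        return MOVE_FACTOR * Math.abs(force);
    }

    /** Directly proportional to the strength of the emitted lightning strike. */
    static double lightning(double strength) {
        return LIGHTNING_FACTOR * Math.abs(strength);
    }

    /** Total demand of one step: base demand plus the demands of all executed actions. */
    static double stepDemand(double... actionDemands) {
        double total = BASE_DEMAND;
        for (double demand : actionDemands) {
            total += demand;
        }
        return total;
    }
}
```

For example, `ActionEnergySketch.stepDemand(ActionEnergySketch.move(2.0), ActionEnergySketch.EAT_DEMAND)` adds the proportional move demand and the constant eat demand to the base demand of the step.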

Next, the ARSIN agent is described. It differs from the entities already introduced in that more sensors and actuators are available and that the body contains rich internal systems.

ARSIN Agent

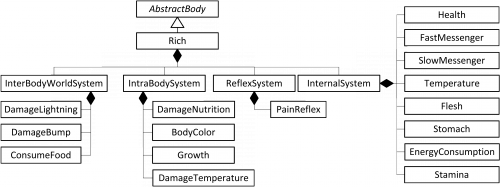

The ARSIN Agent is the entity which offers the most internal systems, sensors, and actuators in the ARSIN world. It serves as the "vehicle" to test various AGI control architectures in this simulator. Figure \ref{fig:platform:arsin:richbody} shows the four internal components which are introduced with the rich body: IntraBodySystem, ReflexSystem, InterBodyWorldSystem, and InternalSystem. The ReflexSystem is executed during the //process// phase, while the other three are executed during the //update// phase. Functionality provided by InterBodyWorldSystem is invoked by external functions asynchronously.

The ReflexSystem deals with instant, bodily reactions to sensor stimuli. For example, if the agent is bumped and the force inflicted is strong enough to be perceived as painful, a sound meaning "ouch" could be emitted (implemented in class PainReflex).

Class InternalSystem operates as a facade for all systems which deal with body-internal concepts like digestion, communication between internal systems, and health. Stomach processes consumed food and calculates the potentially available energy. From this potential energy, EnergyConsumption withdraws the amount of energy needed to perform all actions and to operate all internal systems. Analogous to the energy demand of the actuators described in the previous subsection, internal systems need energy to operate too. All these elements register their current energy demand in EnergyConsumption. A second type of energy resource is stamina. Each action which withdraws energy also needs stamina. Class Stamina processes these demands. As long as sufficient stamina is available, all actions can be executed. Otherwise, the agent has to rest. Stamina restores itself at a configurable rate. Usually, stamina is exhausted and refilled much faster than energy. Flesh defines which nutrition the agent is built of and its basic weight. The total weight is the sum of the nutrition units stored undigested in the stomach and the weight of the flesh. The agent has a body temperature which is controlled by Temperature. Depending on the outer temperature, the activity rate, and the health condition, the temperature is lowered or raised.

Two different types of communication channels are implemented in the rich agent's body: SlowMessenger and FastMessenger. The channel SlowMessenger is inspired by hormones. Several sources can emit a hormone, which results in an increased aim value. The measurable value of the slow messenger rises quickly to this aim value. A slow decay rate then lets the value converge towards zero. The resulting behavior is that, for example, several internal systems can emit the slow messenger signal $A$, and the internal sensor receives only the current value. Thus, the control architecture cannot tell which source has emitted how much of this messenger, and the effect of the emission persists for some time. This is different for messages generated by the FastMessenger channel, which is inspired by the human nervous system. A distinct channel connects the emitter directly with the receiver. The emitted value exists only as long as the source produces it.

Health stores the information on the body integrity of the agent. In its simplest implementation, Health consists of a single value where //1// equals full health and //0// equals death. The health of the agent is influenced by external causes like being bumped or attacked as well as internal causes like poisonous food or a too high temperature.
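The different behavior of the two messenger channels described above can be sketched as follows. The exact update rules of SlowMessenger and FastMessenger are not given in the text. The rise and decay fractions, the order of operations in `step()`, and all class and method names below are assumptions chosen to reproduce the described behavior qualitatively.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hormone-like channel: emissions of all sources are merged into one measurable value. */
final class SlowMessengerSketch {
    private final double riseFraction;   // assumed: large, the value follows the aim quickly
    private final double decayFraction;  // assumed: small, the aim fades slowly
    private double aim;
    private double value;

    SlowMessengerSketch(double riseFraction, double decayFraction) {
        this.riseFraction = riseFraction;
        this.decayFraction = decayFraction;
    }

    /** The source is deliberately not recorded: the sensor cannot tell who emitted. */
    void emit(double amount) { aim += amount; }

    void step() {
        if (value < aim) {
            value += riseFraction * (aim - value);  // fast rise towards the aim value
        } else {
            value = aim;                            // follow the aim on its way down
        }
        aim *= (1.0 - decayFraction);               // slow decay towards zero
    }

    double value() { return value; }
}

/** Nerve-like channel: one entry per source, present only while the source emits. */
final class FastMessengerSketch {
    private final Map<String, Double> active = new LinkedHashMap<>();

    void emit(String source, double intensity) { active.put(source, intensity); }

    /** Snapshot of all currently emitted signals, keyed by their source. */
    Map<String, Double> read() { return new LinkedHashMap<>(active); }

    /** Signals have to be emitted again in every step to stay visible. */
    void endStep() { active.clear(); }
}
```

In this sketch, a slow messenger emitted once is still measurable many steps later, whereas a fast messenger entry disappears as soon as its source stops emitting.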

These intra body interactions between different internal systems and the causes of external events like being attacked are processed by IntraBodySystem. In case of poisonous food, class DamageNutrition determines the severity of the poisoning. Possible results range from higher body temperature, color change, and pain originating in the stomach to a reduction of health. Another body related cause for health problems is a too high or too low body temperature. This is handled by DamageTemperature. The task of BodyColor is to adapt the skin color of the agent to various internal states like health, temperature, and activity. In case the agent should have the ability to grow with time, this is done by Growth. It withdraws energy and nutrition from Stomach, adds them to Flesh, and updates the MASON shape of the agent.

The last of the four systems introduced is InterBodyWorldSystem. It consists of the three components DamageBite, DamageLightning, and ConsumeFood. The first two respond to attacks by other entities: they reduce health and add pain messages to the fast message system. The third one is invoked by another entity (entity //A//) in case it tries to eat this agent (entity //B//). If successful, the weight stored in //B//'s Flesh is reduced and the content of //A//'s stomach is increased by the corresponding amount. If //B// is still alive, pain and health reduction are applied similarly to the other two damage functions.

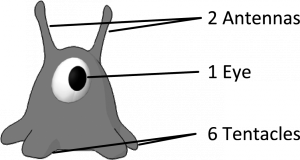

Figure \ref{fig:platform:arsin:arsin} shows the shape of the ARSIN agent. This alien form has been chosen deliberately. As explained in Section \ref{sec:intro:solution}, the body of the agent is only loosely inspired by nature and the human body. To avoid the trap of trying to emulate the human body as precisely as possible - which is not the aim of this research - a body shape not existing in nature has been chosen. It consists of a torso with six tentacles attached. They can be used for movement and for object manipulation. Further, the body consists of two antennas and a single eye. Every other aspect like ears or internal systems is not visible from the outside.

In addition to the sensors and actuators of the simple body described previously, the rich body introduces several new internal and external sensors as well as possible actions. The eight added internal sensors are:

* [Health:] This sensor returns a value denoting the state of health the agent is in.

* [Fast Messenger:] It generates a list of all currently emitted fast messenger signals. Each entry consists of the signal's source and its intensity. Inactive sources have no corresponding entry in this list.

* [Slow Messenger:] The list generated by this sensor contains all defined slow messenger types independent of their current value. For each type, only its measured amount is listed.

* [Stamina:] It returns a value corresponding to the available stamina as stored in the Stamina system.

* [Heart Beat:] This one is linked to the Stamina system too. Unlike the stamina sensor, this sensor returns a representation of the stamina reduction in the last step. Thus, in case of high activity of the agent and the resulting fast reduction of the available stamina, a high heart beat value is returned.

* [Stomach:] This sensor returns the aggregated value of the available potential energy represented by the various nutrition types and their amounts stored in the stomach. The non-availability of an important nutrition has an above average impact on the resulting value.

* [Stomach Tension:] It returns the normalized amount of nutrition units stored in the stomach. If a lot of units are in the stomach, a high tension value is returned. This is independent of whether a particular nutrition type is missing.

* [Temperature:] A representation of the current body temperature is returned by this sensor.
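The relation between the Stamina system and the two sensors Stamina and Heart Beat can be sketched as follows. The class `StaminaSketch`, its method names, and the exact bookkeeping (demand first, then restoration, heart beat as net reduction of the last step) are assumptions for this illustration. The text only states that stamina is withdrawn by actions, restores itself at a configurable rate, and that the heart beat represents the stamina reduction in the last step.

```java
/** Hypothetical stamina model with the two sensor readings derived from it. */
final class StaminaSketch {
    private final double max;
    private final double restoreRate;   // configurable restoration per step
    private double level;
    private double lastReduction;

    StaminaSketch(double max, double restoreRate) {
        this.max = max;
        this.restoreRate = restoreRate;
        this.level = max;
    }

    /**
     * Processes the stamina demand of one step.
     * Returns false if stamina is insufficient, meaning the agent has to rest.
     */
    boolean step(double demand) {
        double before = level;
        boolean sufficient = demand <= level;
        if (sufficient) {
            level -= demand;
        }
        level = Math.min(max, level + restoreRate);     // stamina restores itself
        lastReduction = Math.max(0.0, before - level);  // net loss in this step
        return sufficient;
    }

    /** Reading of the Stamina sensor. */
    double stamina() { return level; }

    /** Reading of the Heart Beat sensor: high after a fast stamina reduction. */
    double heartBeat() { return lastReduction; }
}
```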

Three additional external sensors are introduced. They enable feedback on the movement commands and add the sensing abilities needed for the manipulation actions added below.

* [Acceleration:] This sensor returns the direction and the forces of the last position change.

* [Manipulateable Area:] Analogous to the eatable area sensor, this sensor is needed for the agent to detect which objects can be manipulated.

* [Tactile:] The surface condition of the floor and of entities within the manipulation area can be perceived with this sensor.

In addition to the five basic actions which allow the agent to roam the world and survive in it, several interactions are introduced with the rich body. Two different types of interaction groups exist: interaction with entities and interaction with other agents. Three actions related to the introduced internal systems are added too.

* [Say:] This action enables the agent to transmit symbols to other agents in the vicinity. Each symbol equals a concept like "I need help against an enemy." Thus, this concept bypasses language with its syntax and grammar. The energy consumed by the execution of this action is proportional to the loudness used. Nevertheless, the resulting amount is still very low compared to the energy demand of the action move.

* [Kiss:] A social interaction between two ARSIN agents. A small fraction of energy is needed for the execution of this action.

* [Sleep:] Sets the agent to rest mode. Sensor sensitivity is turned down and no other actions are executed. This results in a low energy demand and can be used to regain stamina. Thus, execution of this action does not need additional energy. However, internal systems like the stomach still need energy during sleep.

* [Excrete:] Gets rid of indigestible nutrition stored in the stomach and produces a corresponding entity in the world. Execution of this action needs a small amount of energy.

* [Body Color:] Change the color of the agent's skin. The values for red, green, and blue can be changed independently. This action needs no energy to be performed.

* [Facial Expression:] Change the facial expression of the agent. The parts which can be changed by this action are the positions of the antennas, the size of the eye's pupil, and the shape of the pupil. To change the facial expression, only a small fraction of energy is needed.

* [Pick Up:] This action allows the agent to manipulate entities. Depending on the weight of the entity and the strength of the agent, all mobile entities can be carried. Only objects within the manipulateable area can be picked up, and only one object can be carried. The energy necessary to perform this action depends on the weight of the entity to be lifted. Once an entity is carried, additional energy is necessary in each step to keep holding it.

* [Drop:] This is the inverse action of "pick up." This action does not need any energy to be performed.

* [To Inventory:] If the agent is equipped with an inventory, currently picked up entities can be moved to it (if there is still room left). The action itself does not consume any energy, and once an object is within the inventory, less energy is needed to carry it.

* [From Inventory:] This is the inverse action of "to inventory." It can be executed only if no other object is carried. This action does not need any energy to be performed. However, more energy is needed to carry the object retrieved from the inventory.

* [Cultivate:] Currently carried plants can be cultivated. This special version of drop attaches the plant to the surface and - if everything worked as planned - the plant starts to grow. As this action is more complicated than just putting an object on the ground, it needs some energy to be executed.

The result of the introduction of the above listed internal systems, additional sensors, and more possible actions is an agent platform capable of acting within complex and social environments. The system is designed to be open to additional components. Thus, additional components can be added to the A-Life simulation to meet new requirements in case the focus shifts to one of the later use-cases described in Section \ref{sec:platform:usecases}. The tools to measure and observe the internal components are introduced in the next subsection.

Inspectors

MASON offers a variety of inspectors to probe the internal states of the agent of interest. These inspectors can be used to view vital information on each agent/entity during a simulation run. In combination with the graph drawing tool JGraphx [JGRAPHX2011], the following set of probes for bodily states has been implemented in the ARSIN world simulator:

* [Attributes:] This probe displays body attributes like facial expression and body color.

* [Fast Messengers:] It displays the list of the currently active fast message entries. Each entry consists of the triple: Source, destination, and intensity.

* [Flesh:] The current composition of the agent's flesh as described above.

* [Internal Energy Consumption:] This one displays a detailed list of the total energy consumption and the energy consumption for each component. The last ten steps are listed in full detail. Older steps are grouped and their average value is shown. This is an important tool to balance the energy demand of the various components.

* [Slow Messengers:] It displays a time line graph containing the values for all existing slow messengers.

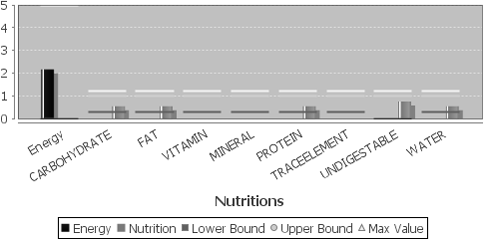

* [Stomach:] This inspector displays the various nutrition types the agent can process. A screenshot of this inspector is shown in Figure \ref{fig:platform:arsin:insp:stomach}. All other nutrition types are grouped to the column undigestible. The column on the left side depicts the potential energy available.

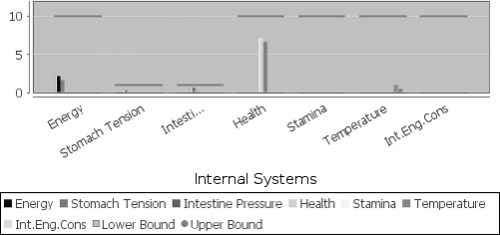

* [Internal Systems:] If the state of an internal system like stomach, health, or stamina can be represented by a single value, it is shown in the internal systems inspector (see Figure \ref{fig:platform:arsin:insp:internal}).

In addition to these body state inspectors, special inspectors exist to probe the state of the various modules of the decision unit. They range from displaying the incoming sensor information, to the memory meshes processed in the current step, to the active wishes, and to a list of the last selected actions. The body state inspectors are located in the project World, whereas the decision unit inspectors are located in the project DUInspectors.

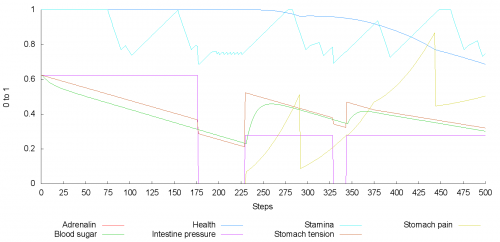

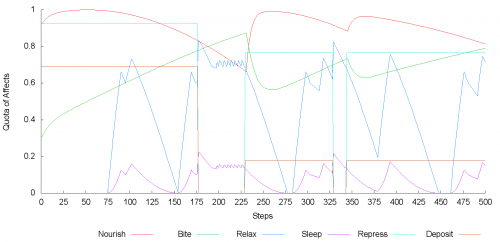

Two decision unit related inspectors are shown as examples. The inspector for E2 is shown in Figure \ref{fig:platform:arsin:insp:homeo}. This chart displays the change of the internal measures after they have been converted into processable symbols by the neurosymbolization. The y-axis is labeled with //0 to 1//. Each homeostatic value represents something different, so a more detailed label would clutter the graph. Nevertheless, the intensity value of each symbol is normalized to the range $0$ to $1$ by E2.

The second inspector shown (Figure \ref{fig:platform:arsin:insp:drive}) displays the results of Module E5 for the simulation steps $0$ to $500$. In each step, the results from E2 are processed by the modules E3, E4, and E5. After E5, the homeostatic symbols have been converted to drives represented by their pairs of opposites plus the attached quota of affects.

The data shown in Figure \ref{fig:platform:arsin:insp:drive} and Figure \ref{fig:platform:arsin:insp:homeo} is explained and discussed in Sections \ref{sec:results:stepbystep} and \ref{sec:results:impact}.

[Next: Decision Unit Framework]

<references />